Generative AI: basic guidance

Overview

This page provides guidance on using Generative Artificial Intelligence (Gen AI) tools within the NSW Government. It outlines responsible, safe, and ethical practices for working with these tools and builds on existing NSW Government guidance on artificial intelligence.

Applications across NSW Government are rapidly integrating AI. Staff must be aware of generative AI, stay informed and remain vigilant. Portfolio agencies and industries are enhancing their policies and procedures for Gen AI so that specific, business-aligned instructions and rules are in place. If your Agency is yet to develop guidelines, or if you are in doubt, refer to this guide.

This is part of a series of guidelines related to the field of generative AI that we will continue to deliver.

This guideline does not cover:

- Sourcing and deployment

- Governance and risk management

- Policies and standards

What is generative AI?

Generative AI (Gen AI) is a wide-ranging term that refers to any form of artificial intelligence capable of generating new content, including text, images, video, audio, or code.

Easily accessible examples of Gen AI include ChatGPT (OpenAI), Bard (Google), Midjourney, and Copilot (Microsoft). These tools allow individuals to input text and receive AI-generated content. They offer functions such as summarising lengthy articles, providing concise answers to questions, or generating code snippets from a description.

Why should you care?

This technology is rapidly being integrated into many of the applications we commonly use.

Gen AI presents a promising opportunity to enhance the public sector workforce by supporting everyday tasks such as writing, copywriting, research, Q&A, and more. The NSW Government is actively exploring these opportunities, and staff are encouraged to be curious about the benefits.

However, it's essential to consider the risks associated with Gen AI. While Gen AI excels at answering questions and presenting information engagingly, its accuracy is not guaranteed. The technology sometimes exhibits a phenomenon known as 'hallucination', confidently producing incorrect, nonsensical, or off-topic information.

Gen AI's performance varies depending on the task and language. This technology learns from extensive internet data, which might inadvertently introduce biases, favouring certain user groups or perpetuating stereotypes.

How do you know if you are using it?

If you type questions or instructions (typically known as a prompt) into a designated input area and receive text, images, video, or even voice responses, chances are you're using Gen AI. This technology is apparent in well-known products like OpenAI ChatGPT and Microsoft BingAI.

Example prompt: "What are the three essential sections that should be included in a government policy paper? Provide the section heading only."

Example response:

- Executive Summary

- Policy Analysis and Rationale

- Implementation and Monitoring

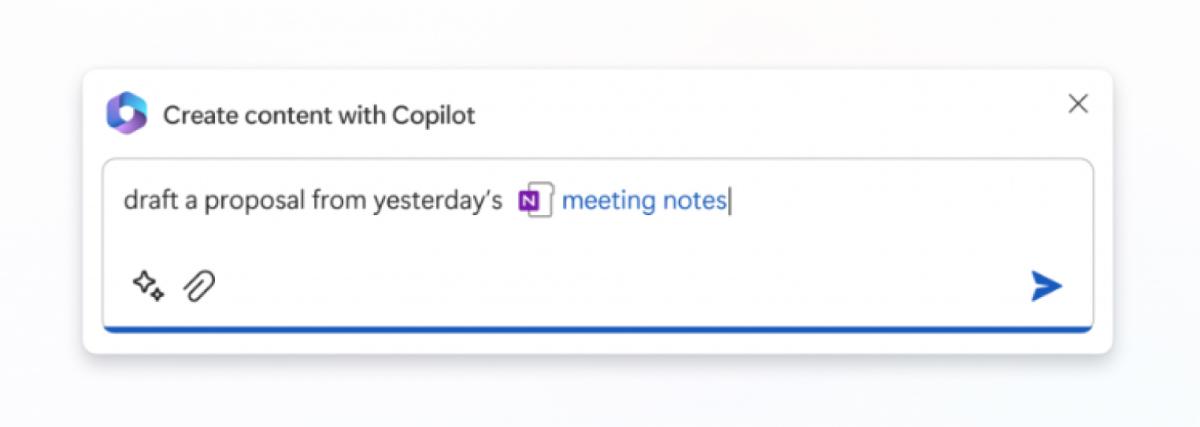

Gen AI can also be less conspicuous, seamlessly integrated into applications you currently use and presented as a prompt within them. Microsoft Copilot, which is integrated into Office 365, is expected to be used by public servants daily. We suggest seeking guidance from your Agency's CIO before using such a feature. Your CIO can also validate any variations to the guidance presented on this page.

Follow the guidance below when using Gen AI tools

Never put sensitive information or personal data into these tools

Do not input sensitive or personal data into web-based Gen AI tools. Doing so can result in privacy law violations, legal complications, or harm to individuals, groups, organisations, or government entities. Do not enter any information that could have adverse consequences if disclosed. For more information, click here.
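As an illustration only, the short Python sketch below shows one way a team might remind users to check a prompt before submitting it. The patterns and the function name `find_possible_personal_data` are hypothetical and far from complete. They are not part of any NSW Government tool or policy, and a check like this cannot replace your own judgment about what is sensitive.

```python
import re

# Hypothetical patterns for illustration only; not an official or complete list.
PATTERNS = {
    "email address": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "Australian mobile number": re.compile(r"\b04\d{2} ?\d{3} ?\d{3}\b"),
    "date (possible date of birth)": re.compile(r"\b\d{1,2}/\d{1,2}/\d{4}\b"),
}


def find_possible_personal_data(prompt: str) -> list[str]:
    """Return the labels of any patterns that appear in the prompt text."""
    return [label for label, pattern in PATTERNS.items() if pattern.search(prompt)]


if __name__ == "__main__":
    draft = "Summarise this complaint from jane.citizen@example.com, mobile 0412 345 678"
    flagged = find_possible_personal_data(draft)
    if flagged:
        print("Check before submitting. Possible personal data found:", ", ".join(flagged))
    else:
        print("No obvious personal data patterns found. Still review the prompt yourself.")
```

An empty result does not mean a prompt is safe to submit. Simple pattern matching will miss names, case details, health information, and other sensitive context.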

Understand usage impact

Be mindful of how your questions and instructions contribute to the learning process of these systems. If you provide inaccurate, biased, or sensitive information, the AI might learn from it and generate content that reflects it.

Be aware of prompt sensitivity

Even minor variations in how you phrase and sequence questions and instructions can yield different responses. Small slips such as grammatical errors or typos might lead to inaccurate or subpar output. Pay attention to wording and sequencing to get the desired results.

Know that the information generated may not be accurate or correct

Recognise that the information generated by Gen AI may not always be accurate or correct. Fact-check all information against a verifiable source. Gen AI can sometimes exhibit a phenomenon called "flattery" (also known as sycophancy), where it provides a response that aligns with your opinion even if that response is incorrect. Additionally, these systems can give different answers to the same question, and their answers may come from sources you wouldn't rely on in other situations.

Be aware of bias and context

Remember that the information provided by Gen AI tools can be biased or taken out of context. These tools generate responses by selecting words from a set of options they deem plausible. However, they cannot reliably understand context or detect bias. Exercise caution when relying on these tools' outputs, and use your judgment and knowledge to critically assess the information they produce.

Appropriately attribute Gen AI tool usage

Whenever you use data or outputs from Gen AI, whether directly or with slight modifications, it's essential to provide a reference. Indicate that a Gen AI tool was utilised by citing it in footnotes, references, or watermarks.

Know the content complies with intellectual property rights

When generating images, videos, or voice content, ensure that the solution provider offers legal coverage, including fair treatment of artists and respect for intellectual property rights. If you are sourcing a voice or images to clone, or to generate new voice, image, or video content, you must obtain the artist's approval for the intended use case.

Ensure you can explain your content

Gen AI provides limited explanation of, and transparency into, how it generates content. When using generated content, it is crucial to conduct extra research so that you can explain the underlying logic and reasoning. You are responsible for the content you use, including when that content leads to negative consequences not covered by professional indemnity.

Follow Cyber Security NSW generative AI use guidance

Gen AI presents unique new cyber security risks. Please follow the advice provided by Cyber Security NSW.

Apply the NSW AI Assessment Framework

Never use Generative AI for any part of an administrative decision-making process until you have applied the NSW AI Assessment Framework.

Examples of appropriate use

For all the following examples, ensure you apply the guidance provided.

Meet Jordan:

Jordan, a government policy analyst, is eager to gain a deeper understanding of how various countries calculate renewable energy production. Jordan turns to Gen AI to assist in this endeavour.

What should Jordan do?

- Jordan utilises Gen AI to help identify publicly available research papers related to the calculation methods used for renewable energy production in different countries.

- Through Gen AI, Jordan formulates a series of questions to extract valuable insights on the diverse calculation methods employed for renewable energy production in different countries and highlight key differences among them.

- While acknowledging that Gen AI may not always provide completely accurate information, Jordan takes the responsible approach of independently verifying the accuracy of the information obtained.

Meet Alex:

Alex is grappling with the challenge of understanding a dense, publicly available document outlining new environmental regulations.

What should Alex do?

- Alex leverages Gen AI to simplify intricate technical terminology, providing straightforward explanations.

- Alex asks Gen AI to provide a summary of vital insights, highlighting compliance requirements, penalties, and implementation timelines.

- Recognising the potential for inaccuracies, Alex takes a prudent approach by consulting with experts from the legal and regulatory department to validate the interpretation and understanding.

Meet Joon:

Joon is tasked with creating a training module on policy writing. Although Joon is an expert in policy writing, Joon lacks experience in developing training material.

What should Joon do?

- Joon starts by confirming the objectives of the training module and utilises Gen AI to generate topic ideas.

- After generating ideas, Joon validates them with the internal team and then employs Gen AI to propose the structure and content of the training module.

- Joon takes the time to carefully review and refine the generated content, ensuring that it aligns with the training objectives and does not breach intellectual property rights.

- As a result, Joon efficiently produces a well-structured training module on policy writing. Gen AI output serves as the foundation, and Joon's own review and refinement bring it into line with the training objectives.

Examples of inappropriate use

Never put sensitive information or personal data into these tools:

Meet Rajesh:

Rajesh, a policy officer, has been assigned the important task of revising an official government policy document. Having recently returned from leave, Rajesh faces a pressing deadline to complete this update. Given the time constraints, Rajesh is considering using Gen AI to speed up the process.

What should Rajesh do?

- Rajesh acknowledges that the document contains sensitive personal and health-related information.

- Rajesh is aware that one should never input sensitive content into an AI tool.

- Rajesh decides not to use Gen AI for the official policy document, to protect the confidentiality and security of individuals' information. In such cases, traditional human-driven processes offer greater control, accuracy, and protection of sensitive information.

Meet Aisha:

Aisha is a dedicated government financial analyst responsible for preparing annual financial reports that outline departmental budgets and expenditures.

What should Aisha do?

- Aisha recognises that Gen AI, while powerful in various contexts, lacks the security and specialised expertise required to comprehend complex government accounting practices. Moreover, it carries the risk of introducing errors in budget allocation and financial analysis.

- Consequently, Aisha makes the prudent decision to abstain from using Gen AI for this specific task.

- Instead, Aisha opts for traditional, human-driven processes. This choice not only safeguards government data but also ensures the production of accurate financial reports. It upholds transparency and facilitates informed decision-making.

Meet Alex:

Alex frequently relies on Gen AI to generate content for presentations and documents. Recently, Alex was tasked with generating website images to represent a new community park.

What should Alex do?

- Alex recognises the importance of ensuring that any images generated by Gen AI draw only on work that the respective artists have approved for this use.

- After reviewing the Gen AI policy, Alex finds it unclear whether artists are being treated fairly and whether their intellectual property rights are being respected.

- Alex consults the legal team to clarify this matter and ultimately decides to engage a third-party marketing team to source the images ethically and responsibly.

Final note

As with all digital systems, users are responsible for their own actions and are reminded of their obligations. Users must comply with all applicable legislative requirements and laws. Any collection, storage, use, and disclosure of information must comply with the Privacy and Personal Information Protection Act 1998 (PPIP Act) and the Health Records and Information Privacy Act 2002 (HRIP Act).

Additional acts and regulations that promote the protection of personal and health information in NSW are listed in the NSW AI Ethics Policy.

Note: ChatGPT was used to suggest improvements to sentence structure, grammar, flow and clarity. The content in this guideline is based on publicly available industry research and best practices.

If you are generating AI frameworks, guidelines, procedures, or policies, please connect with us.